How Russia uses AI-generated images to spread propaganda

Russian disinformation campaigns are actively using social media, posing as Ukrainian groups and pages. Their goal is to first gain the audience’s trust, then spread pro-Russian messages, divide society, or create isolated information bubbles.

Espreso TV spoke with Ihor Rozkladay, an expert on this topic, for more details.

The main goal is clickbait

According to Ihor Rozkladay, this can be considered clickbait on a global scale. But what’s the purpose?

"First, some pages promote Chinese sects. Second, many pages are managed from various countries, including the U.S., Indonesia, and others. In these cases, the main goal is to attract an audience," he explains.

Categories of AI-generated images on social media

There are many AI-generated images on social media, and they can be grouped into different categories. Ihor Rozkladay highlights some of the most common types:

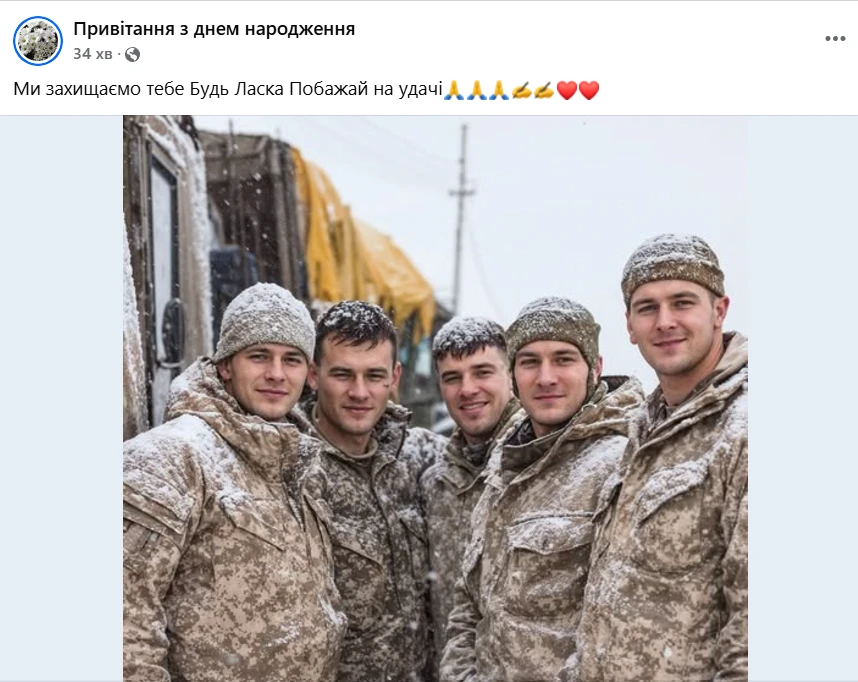

The first category is "tearful" content. These are posts like "No one congratulated me," "Me and my grandmother," or "I’m an orphan." The goal is to stir emotions and get likes from people who sympathize with these "unfortunate" situations.

"I call pages like these 'the naked singer no one likes,'" he says. "This format is often spread by group admins from Armenia, and there are suspicions that Russians might be behind it. Interestingly, these pages often use AI-generated images of military personnel, like scenes of them sitting at a table, hugging, or similar setups."

The second category is family or reproductive content. These include posts like "We have four or six kids" or "We got married today, wish us happiness." The goal here is also to evoke emotions from the audience.

The third category focuses on people with physical disabilities, such as amputations. This format was especially popular in the United States, and sometimes real photos are used for these posts.

The fourth category is “How good I am at something.” These posts feature stories about someone doing something amazing, like carving a horse or an entire city out of wood. While many of these stories are clearly fake, they are presented in a way that makes them seem like real achievements. Examples include “My dad is a genius” or “Look at how smart this boy is.”

There are also other categories, such as baking, which often ties into birthday themes. This category is quite large.

Russians testing algorithms on Ukrainians

As with anything related to information, these pages can be dangerous due to the high potential for manipulation by Russians. Ihor Rozkladay has noticed that pages using AI-generated images for clickbait often post political content as well. These pages also add YouTube videos and similar materials.

"But there's another trend that I believe is more about the Russians' attempts to test or adapt to algorithms. For example, when I started interacting with these pages, I was recommended others. Most were American or foreign pages, but they focused on the Russian military. They posted content about Russian planes or military equipment with captions like 'powerful weapons' and so on."

According to the expert, there’s a category of pages that publish provocative content, including political posts. For example, they might take a photo of Maidan in Kyiv or the Odesa coastline and add provocative captions like "Crimea is Russia." This triggers an emotional response, helping the page spread through user interactions.

“For instance, I started seeing pages like 'Moscow' in my recommendations, showing girls in front of Red Square. When I looked closer, I noticed mistakes — like inaccurate building locations and mismatched details. This is a typical example of how fake pages are created to promote Russian propaganda,” he says.

How it works

Algorithms follow their own logic, which is, of course, a trade secret. This is how platforms make money, so no one can fully explain how they work.

“However, it’s clear that the Russians and others are trying to study these algorithms and mimic certain behaviors. For example, if you start interacting with a particular type of content, they’ll begin promoting similar content to you. I had an experience where I once clicked on a medical-related ad. I don’t remember exactly how it happened — maybe I was sick and searching for something. Now, fraudulent ads constantly appear in my feed. For instance, some ‘news’ about Dr. Todurov being involved in a scandal or something like that. These are obvious fakes, but I keep seeing ads like this,” says Ihor.

How to interact with such content

We live in a time when it’s increasingly hard to tell fact from fiction, and artificial intelligence tools are being used for manipulation. Our goal should be to stay alert, think critically, and avoid falling for provocations.

"As a researcher, I have to study these pages, but I strongly advise regular users not to engage with this content. Likes, comments, or any form of interaction only help spread it," says Ihor Rozkladay.

Unfortunately, reporting such posts is usually ineffective.

"Platforms often don't respond to complaints if the content doesn’t violate their community guidelines. Even provocative captions or fake news can stay online if they don’t technically break any rules. The best strategy is to simply ignore them and avoid engaging," the expert adds.
